The United States government recently announced stringent new regulations governing exports of artificial intelligence (AI) chips and technology. The initiative is a significant step in the ongoing effort to control the global distribution of advanced computing resources, particularly amid heightened tensions between the U.S. and countries such as China, Russia, Iran, and North Korea. As AI technology continues to evolve, the regulations aim not only to preserve America’s leading position in AI but also to reduce the risks posed by advanced technology in the hands of adversarial nations.

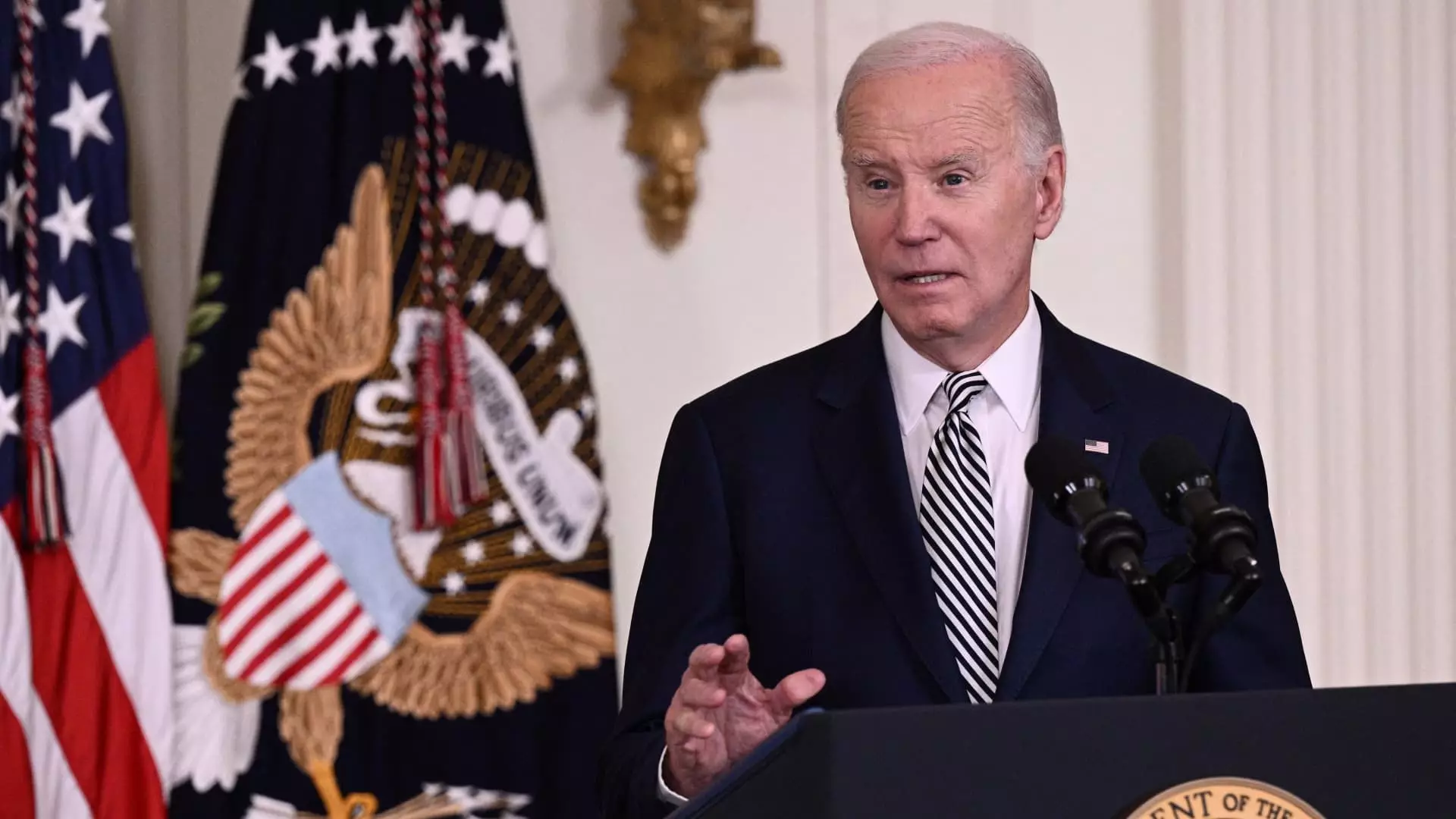

The regulatory framework introduced by the outgoing Biden administration will impose strict limits on the type and volume of AI chips that can be exported to destinations around the world. It sorts countries into three tiers according to their relationship with the United States and the perceived threat they pose to U.S. national security. The top tier, approximately 18 countries including allies such as Japan, the United Kingdom, South Korea, and the Netherlands, will be exempt from the most stringent export restrictions. Around 120 other nations, including Singapore and Israel, will face export caps, while adversaries such as China and Russia will be barred outright from receiving advanced AI technologies.

This division reflects a U.S. strategy of keeping cutting-edge technology under the control of trusted allies, serving both economic and security objectives. By controlling the global flow of AI chips and technology, the U.S. aims to strengthen its leadership in AI development and reduce the likelihood that adversarial nations gain insights or capabilities that could enhance their military or cyberwarfare operations.

The new regulations, scheduled to take effect in approximately 120 days, will particularly affect the market for advanced graphics processing units (GPUs), which power the data centers used for AI training. Chipmakers such as Nvidia and Advanced Micro Devices will be at the forefront of compliance, navigating a complex landscape of licensing requirements and country-specific quotas. Major cloud service providers, including Microsoft, Google, and Amazon, will benefit from a provision allowing them to seek global authorizations to build data centers, which would exempt those projects from the export caps. To earn such permits, however, these companies must meet stringent security requirements, reflecting the government’s emphasis on national security.

The rules have drawn concern from industry leaders, who argue that such sweeping regulations could stifle innovation and shift market power to competitors. Nvidia, for example, called the policy a “sweeping overreach,” warning that it could hinder technological progress in areas where AI development is growing rapidly. Critics also argue that excessive restrictions could inadvertently allow China and other countries to fill the gaps left by U.S. companies, potentially accelerating their own advances in AI.

The implications of these regulatory actions extend well beyond economic interests to national security. U.S. National Security Adviser Jake Sullivan has emphasized the need to prepare for rapid advances in AI capabilities, recognizing AI’s transformative potential in sectors such as healthcare, education, and security. However, the dual-use nature of AI raises significant ethical and security concerns: while AI offers many societal benefits, it also carries severe risks, including enabling cyberattacks, surveillance, and the development of autonomous weapons systems.

Some applications of AI reach into sensitive areas that could threaten human rights, which calls for careful regulatory frameworks to mitigate these dangers. As countries around the world expand their AI capabilities, the U.S. must remain vigilant, not only to maintain its technological edge but also to safeguard democratic values and human rights globally.

Looking ahead, enforcement of these regulations under the incoming Trump administration marks a critical juncture. Despite the change in leadership, there is broad consensus about the competitive threat China poses in AI. As the regulatory landscape unfolds, ongoing dialogue between government representatives and industry stakeholders will be vital to crafting an approach that balances national security with the need for innovation and collaboration.

The latest U.S. export restrictions on AI technologies mark a pivotal moment in the global race for technological supremacy. Sorting countries into tiers based on trust and threat assessments carries significant implications for international trade and cooperation. As the stakes rise, adaptive strategies and robust alliances will be essential for navigating the evolving AI landscape, which will shape both the future of technology and geopolitical relations for years to come.
