In an era where artificial intelligence increasingly permeates our lives, Microsoft has introduced Mu, an AI model engineered to run directly on devices. This is more than a cosmetic addition to the Windows ecosystem: it signals a shift toward on-device computation that gives users more control. Running the model locally reduces dependence on centralized servers, which benefits both privacy and speed, while still serving Microsoft’s vested interest in advancing its AI capabilities.

Unveiling Localized AI Agents

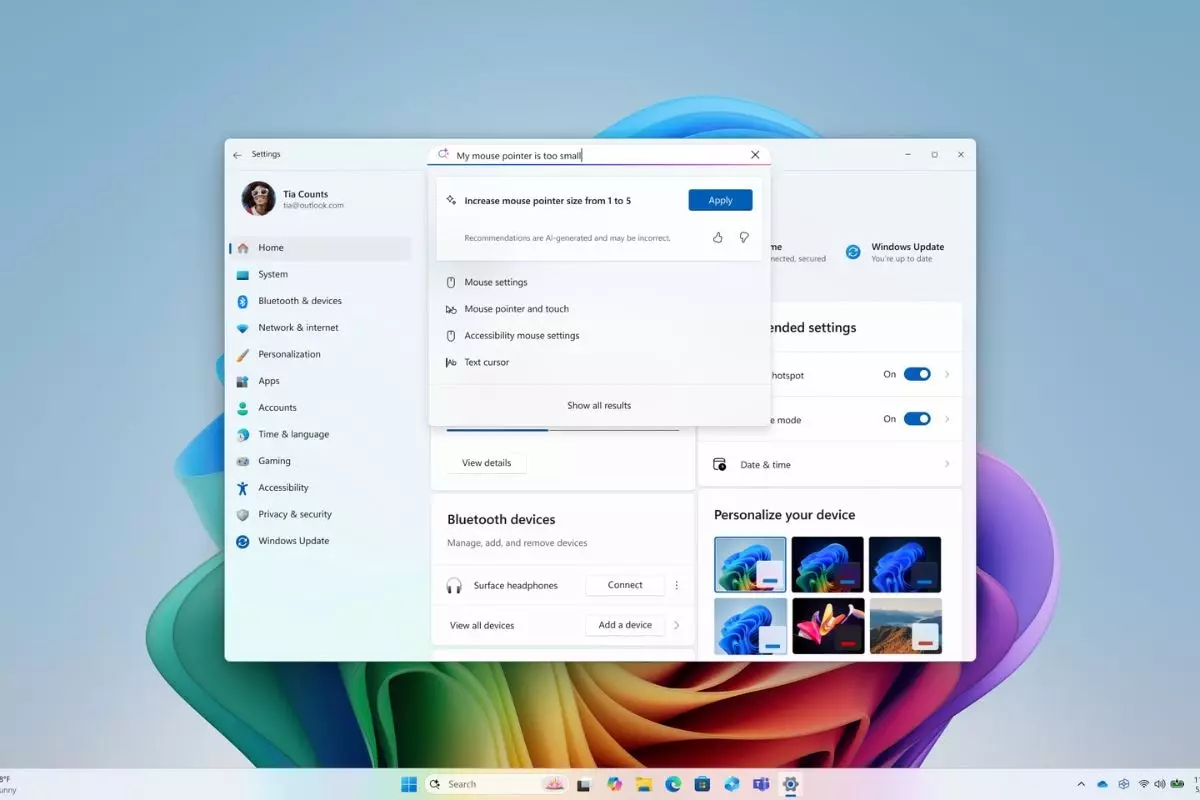

Last week, Microsoft unveiled a range of new features in Windows 11, including an AI agent built into the Settings menu. Users can describe what they need in natural language, and Mu either directs them to the relevant setting or applies the change on their behalf. Letting people talk to their devices in everyday language lowers the barrier to using them, and that should not be underestimated. Yet with this power comes responsibility: developers and users alike must stay alert to the implications of putting AI in user-facing roles.

Mu’s key advantage is that it runs on the device’s neural processing unit (NPU), handling complex tasks without a cloud connection and delivering a more efficient, responsive experience. Server-based AI carries familiar drawbacks: network latency and the privacy risks of sending data off the device. Microsoft positions local computation as a more secure way to improve the user experience.

The Technical Triumph of Mu

Under the hood, Mu is a small language model (SLM) built on a transformer-based architecture with 330 million parameters. The choice of a smaller model is noteworthy: technology giants have long leaned toward ever-larger models and accepted the higher operational costs and environmental impact that come with them. Here, Microsoft takes a leaner, more ecologically mindful approach, tuning the model’s parameters and efficiency to the NPU’s specifications.

Trained through distillation from Microsoft’s Phi series, Mu performs on par with larger predecessors while occupying significantly less space. This underlines a critical point for AI development: efficiency doesn’t have to compromise output quality. It also raises a provocative question: can smaller, well-optimized models replace their larger counterparts across varied applications?
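To put the model's size in perspective, the arithmetic below estimates how much storage the raw weights of a 330-million-parameter model would need at several numeric precisions. Only the parameter count comes from the article; the bit widths are illustrative assumptions, not a statement about the format Mu actually ships in, and the function name is hypothetical.

```python
# Back-of-the-envelope estimate of raw weight storage for a 330M-parameter model.
# The bit widths below are assumptions for illustration only.

PARAMETER_COUNT = 330_000_000  # figure quoted in the article


def weight_footprint_mb(parameter_count: int, bits_per_weight: int) -> float:
    """Return raw weight storage in megabytes (1 MB = 10**6 bytes)."""
    return parameter_count * bits_per_weight / 8 / 1_000_000


if __name__ == "__main__":
    for bits in (32, 16, 8, 4):
        size = weight_footprint_mb(PARAMETER_COUNT, bits)
        print(f"{bits:>2}-bit weights: {size:,.0f} MB")
```

At 16 bits per weight this works out to 660 MB, and each halving of precision halves the figure. The estimate ignores activations, caches, and runtime overhead, so it is a lower bound on what the model needs in practice.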

Language Nuance: Balancing Precision and User Experience

However, this progress is not without its challenges. The model must grapple with human language, which is full of ambiguity and nuance. Microsoft found that Mu performs better on multi-word requests, while very short prompts give it too little context to work with. For example, “lower screen brightness at night” yields better results than “brightness” alone, which shows how much the model depends on context.

To compensate for less effective single-word requests, Microsoft continues to provide traditional keyword-based search results, so users aren’t left stranded. This dual-system approach offers the best of both worlds: natural language processing alongside the conventional search users are accustomed to. Tuning the AI’s responsiveness across such a wide range of queries requires constant adaptation and a commitment to user feedback.
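The dual-system idea described above can be pictured as a simple router that decides which path a query takes. This is a minimal sketch, not Microsoft's implementation: the function name, the return labels, and the two-word threshold are all hypothetical, chosen only to mirror the multi-word versus single-word distinction in the article.

```python
# Hypothetical router: multi-word queries go to the language-model agent,
# short queries fall back to keyword search. The threshold is an assumption.


def route_query(query: str, min_words_for_agent: int = 2) -> str:
    """Return "agent" for multi-word queries, otherwise "keyword_search"."""
    word_count = len(query.split())
    if word_count >= min_words_for_agent:
        return "agent"
    return "keyword_search"


if __name__ == "__main__":
    for text in ("lower screen brightness at night", "brightness"):
        print(f"{text!r} -> {route_query(text)}")
```

A real system would likely weigh more than word count, such as the model's confidence in its answer, but the sketch shows why keeping keyword search available gives short queries a sensible fallback.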

The Road Ahead: Continuous Improvement and User-Centric Design

Microsoft has also addressed settings that overlap. For example, a command like “increase brightness” could apply to either the computer’s own screen or an external monitor; in such cases, Mu favors the most frequently used settings to improve accuracy. Microsoft also scaled up the training data from dozens of settings to hundreds, reflecting an approach to AI development rooted in user needs.
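One way to picture the "favor the most frequently used setting" idea is a tie-breaker over candidate settings that all match the same command. The sketch below is an assumption-laden illustration: the `Setting` type, the `usage_count` field, and the sample numbers are invented for the example, and the article does not say whether Mu applies this preference at training time or at query time.

```python
# Hypothetical tie-breaker: when several settings match a command,
# prefer the one that is used most often. All names and counts are invented.
from dataclasses import dataclass


@dataclass(frozen=True)
class Setting:
    name: str
    usage_count: int  # hypothetical popularity signal


def pick_most_used(candidates: list[Setting]) -> Setting:
    """Return the candidate with the highest usage count."""
    if not candidates:
        raise ValueError("no candidate settings to choose from")
    return max(candidates, key=lambda setting: setting.usage_count)


if __name__ == "__main__":
    matches = [
        Setting("Built-in display brightness", usage_count=900),
        Setting("External monitor brightness", usage_count=120),
    ]
    print(pick_most_used(matches).name)
```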

While the thrill of innovation often sweeps us off our feet, it is critical to engage with the ethical and logistical considerations that accompany such advancements. With the promise of a localized AI that adapts to how individual users phrase their requests, we must ask ourselves how to keep these tools accessible and intuitively integrated into our lives, without the pitfalls of over-centralization. The journey ahead will demand not only technological dexterity but also a steadfast commitment to addressing the ethical ramifications of AI development in a rapidly evolving digital landscape.
