OpenAI has officially launched Sora, its AI video generation model. Sora was first previewed to a limited group of testers in February. It is now publicly available as Sora Turbo, a version OpenAI describes as significantly faster than the preview model. Sora lets users generate high-definition videos from text prompts, and the release extends OpenAI’s push into generative media.

Sora can create videos at up to 1080p resolution and up to 20 seconds long. That makes it useful for content creators, marketers, and educators who work with short-form video. The tool is hosted on a standalone website and is available only to paying ChatGPT subscribers. This ties the product directly to OpenAI’s premium tiers.

Access is limited to the ChatGPT Plus and Pro tiers. Plus subscribers can generate up to 50 videos per month at lower resolutions. Pro subscribers get substantially higher usage limits, along with higher resolutions and longer durations. OpenAI has not specified exactly what those “higher resolutions” and “longer durations” are, so the full scope of the Pro tier remains unclear.

Technical Underpinnings of Sora

OpenAI describes Sora as a diffusion model. It starts from what looks like static noise and gradually removes that noise over many steps until a video emerges. Because the model considers many frames at once, it can keep a subject consistent across a clip, even when that subject briefly leaves the frame. Sora is also built on a transformer architecture. It borrows the recaptioning technique from DALL-E 3, which generates highly descriptive captions for the training data, so that the model follows text prompts more faithfully.

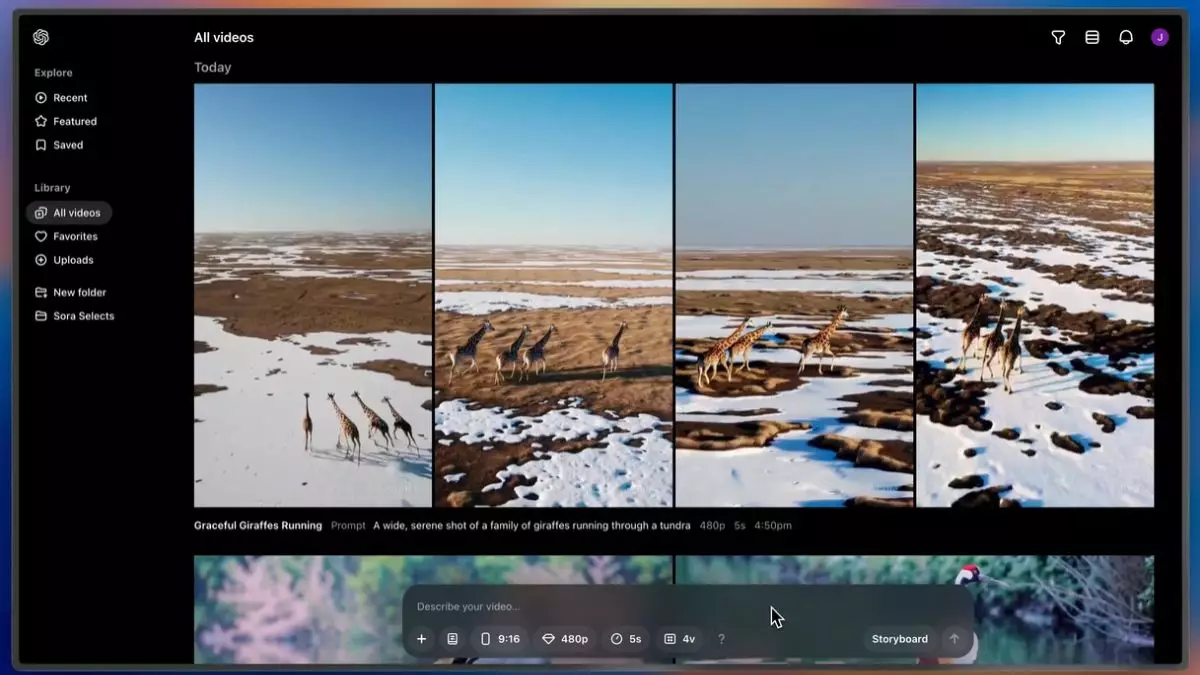

Sora’s storyboard interface lets users specify inputs for individual frames, which gives them finer control over how a clip unfolds. Users can generate videos from scratch or remix existing media.

To build Sora, OpenAI assembled training data from several sources, including public domain content, proprietary partnerships, and curated resources from contributors and artists. Partners such as Shutterstock and Pond5 helped develop a proprietary dataset used to train the model. This curation is intended to give the model broad coverage of video content, so that its output is coherent and appropriate to the prompt.

OpenAI has also taken steps to address the risks of AI-generated media. Videos created with Sora carry visible watermarks and embedded metadata that follows the standard set by the Coalition for Content Provenance and Authenticity (C2PA). These markers are meant to make AI-generated content easier to identify and to limit the spread of misleading or harmful material. OpenAI’s usage guidelines also prohibit using Sora to generate inappropriate or abusive content.

The launch of Sora is a notable step in AI-assisted video generation. The model expands what creators can produce, and it also raises questions about responsible use. OpenAI has tried to address those questions through provenance labeling and usage restrictions. Sora combines tools for creative control with a framework for ethical use, which positions it to shape how people create, share, and consume video. Its early adoption across industries will offer a first look at the role AI may play in creative fields.
